Introduction

Can AI finally match the emotional depth and natural delivery of human voice actors? While traditional Text-to-Speech (TTS) tools have improved dramatically, they have consistently fallen short at understanding the context and emotional nuance of the words they speak. Enter Octave by Hume AI, billed as the first large language model built specifically for AI-powered voice acting and a rethink of how synthetic voices are produced.

Unlike conventional TTS systems that simply convert text to audio, this new generation of AI actually comprehends the meaning behind the words, allowing it to adjust its delivery dynamically—whispering, expressing sarcasm, or conveying complex emotions as the script demands. With 65% of consumers now preferring audio content over text, AI voice tools are rapidly becoming essential for content creators, podcasters, and businesses alike.

In this comprehensive guide, we'll explore how Octave works, how it compares to traditional TTS platforms, and how you can use it to create lifelike voice content in 2025 and beyond.

Table of Contents

- What You'll Need

- Understanding LLM-Based Text-to-Speech

- How to Make AI-Powered Voice Acting with Octave

- Secrets the Pros Use

- Comparison with Traditional TTS

- Conclusion

- FAQs

What You'll Need

Free Options:

- Hume AI's Octave: Offers 10,000 free credits upon signup, enough to test the platform thoroughly

- Computer with internet connection: Most modern devices work fine with web-based TTS platforms

- Headphones: Recommended for detecting subtle audio nuances that speakers might miss

- Scripts to test: Prepare varied content with different emotional requirements

Paid Upgrades:

- Octave subscription plans: Start at just $3 per month for additional credits

- Enterprise options: Available for high-volume users and companies needing API access

System Requirements:

- Modern web browser: Chrome, Firefox, or Safari recommended

- Stable internet connection: Required for cloud-based processing

- Basic text editing software: For preparing and refining your scripts

Getting started is simple—just visit the Hume AI website and create an account to access your free credits. The platform runs entirely in your browser, making it accessible across devices without specialized hardware.

How to Make AI-Powered Voice Acting with Octave

Step 1: Create Your Voice

- Sign up for Hume AI: Navigate to their website and register for an account to receive your free trial credits.

- Access the playground: After logging in, go to the Text-to-Speech Playground section.

- Prompt your voice: Unlike traditional TTS where you select from pre-made voices, Octave allows you to describe the voice you want in natural language:

Example: "The speaker has a naturally charismatic, slightly nasal and very expressive voice like a beauty blogger from the Midwest who is sharing her latest favorite products and tips."

- Test your creation: Generate a short sample to verify the voice matches your expectations before proceeding with longer content.

Step 2: Craft Your Acting Instructions

This is where LLM-based text-to-speech truly differs from traditional TTS:

- Define the emotional state: Provide specific acting instructions such as "frustrated, emotions boiling, trembles, sad and exhausted."

- Set the delivery style: Add instructions like "clear and easy to understand voice acting from an animated film" or "use dramatic pauses and emphasize sarcasm."

- Be specific but natural: Write instructions as you would to a human actor, not in technical terms.
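
If you prefer to keep your prompts in a script rather than retyping them in the playground, the sketch below shows one way to hold the voice prompt from Step 1 and the acting instructions from Step 2 as separate pieces of data. The function name `build_request` and the keys `voice_prompt`, `acting_instructions`, and `text` are illustrative assumptions, not Hume's documented API schema, so check the official API reference before sending anything.

```python
import json


def build_request(voice_prompt: str, acting_instructions: str, text: str) -> dict:
    """Bundle the three inputs into one plain dictionary.

    NOTE: the key names are hypothetical placeholders, not a real API schema.
    """
    fields = {
        "voice_prompt": voice_prompt,
        "acting_instructions": acting_instructions,
        "text": text,
    }
    for name, value in fields.items():
        # Reject blank inputs early so a missing prompt is caught before generation.
        if not value.strip():
            raise ValueError(f"{name} must not be empty")
    return {name: value.strip() for name, value in fields.items()}


request = build_request(
    voice_prompt="A naturally charismatic, slightly nasal, very expressive voice",
    acting_instructions="frustrated, emotions boiling, trembles, sad and exhausted",
    text="I told you this would happen. I told you a hundred times.",
)
print(json.dumps(request, indent=2))
```

Keeping the voice description and the acting instructions in separate fields makes it easy to reuse one voice across many deliveries.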

Step 3: Prepare Your Script

- Avoid parenthetical notes: Unlike traditional scripts, don't include notes like "(voice trembles)" within your text—Octave will read these literally.

- Structure with projects: For longer content, use Octave's project feature to break scripts into sections with different acting instructions.

- Consider the flow: Write with natural speech patterns in mind—Octave performs best with conversational text.
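
Because parenthetical notes are read aloud, it can help to strip them out of an existing script automatically and keep them for reuse as acting instructions. The following is a minimal sketch using only Python's standard `re` module; the helper name `extract_stage_directions` is hypothetical, and the pattern assumes notes are written in round or square brackets without nesting.

```python
import re

# Matches a bracketed note such as "(voice trembles)" or "[sighs]",
# together with any spaces or tabs directly in front of it.
NOTE_PATTERN = re.compile(r"[ \t]*[\(\[][^\)\]]*[\)\]]")


def extract_stage_directions(script: str) -> tuple[str, list[str]]:
    """Return the script without bracketed notes, plus the notes that were found."""
    notes = [m.group(0).strip(" \t()[]") for m in NOTE_PATTERN.finditer(script)]
    cleaned = NOTE_PATTERN.sub("", script)
    # Tidy leftover double spaces and any space stranded before punctuation.
    cleaned = re.sub(r"[ \t]{2,}", " ", cleaned)
    cleaned = re.sub(r"[ \t]+([,.!?;:])", r"\1", cleaned)
    return cleaned.strip(), notes


script = "I can't do this anymore. (voice trembles) Please, just go."
cleaned, notes = extract_stage_directions(script)
print(cleaned)
print(notes)
```

The recovered notes can then be pasted into the acting instructions for that section instead of being left in the spoken text.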

Step 4: Generate and Refine

- Generate your audio: Click the button to process your text through the LLM.

- Listen critically: Pay attention to emotional consistency and delivery across the entire piece.

- Adjust as needed: Modify your acting instructions or voice prompt if the delivery doesn't match your vision.

- Export your creation: Download the final audio for use in your projects.

Secrets the Pros Use

Matching Content to Voice Type

Professional voice directors know that certain script types work better with specific voices. The same applies when working with AI voice generators:

- Match voice to content: The LLM understands context, so "Who dares enter the cave of Galaxon!" sounds better in a monstrous voice than "I love this new setting powder!" does.

- Script appropriately: Write in a style that matches your chosen voice persona—a wizard should speak differently than a modern teenager.

- Test script segments: Try different emotional instructions with small segments before committing to a full recording.

Creating Consistency Across Longer Content

One challenge with current LLM-based TTS is maintaining voice consistency:

- Use the project feature: Break longer scripts into smaller segments with consistent acting instructions.

- Standardize pitch references: Include a specific pitch range in your voice prompt.

- Consider post-processing: Simple audio editing tools can help standardize pitch variations between segments.

- Use preset voices: For crucial projects, utilize Hume's preset voices which tend to maintain better consistency than custom-prompted ones.
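
One way to apply the first two tips is to treat the voice prompt, including any pitch reference, as a single shared value and let only the acting instructions vary between segments. The sketch below models that idea with a small dataclass. The names `Segment` and `build_project` are hypothetical and do not reflect how Octave stores projects internally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    voice_prompt: str         # identical for every segment in a project
    acting_instructions: str  # may change from segment to segment
    text: str


def build_project(voice_prompt: str, parts: list[tuple[str, str]]) -> list[Segment]:
    """Attach one shared voice prompt to every (instructions, text) pair."""
    return [Segment(voice_prompt, instructions, text) for instructions, text in parts]


project = build_project(
    voice_prompt="A warm, low-pitched male narrator with a steady, unhurried pace",
    parts=[
        ("calm, reflective", "The village slept under the snow."),
        ("rising dread, hushed", "Then the bells began to ring."),
    ],
)
for number, segment in enumerate(project, start=1):
    print(f"{number}. [{segment.acting_instructions}] {segment.text}")
```

Because the voice prompt is written once, a change to the pitch reference carries over to every segment.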

Emotional Intelligence in Voice Design

The most powerful aspect of LLM-based TTS for voiceovers is emotional intelligence:

- Emotional transitions: Unlike traditional TTS, Octave excels at mid-sentence emotional shifts—use this to your advantage in storytelling.

- Leverage contextual understanding: The model understands the meaning behind words, so it naturally emphasizes important phrases.

- Use emotional contrasts: Scripts that transition between emotional states (from calm to angry, or sad to hopeful) showcase the LLM's strengths over traditional TTS.

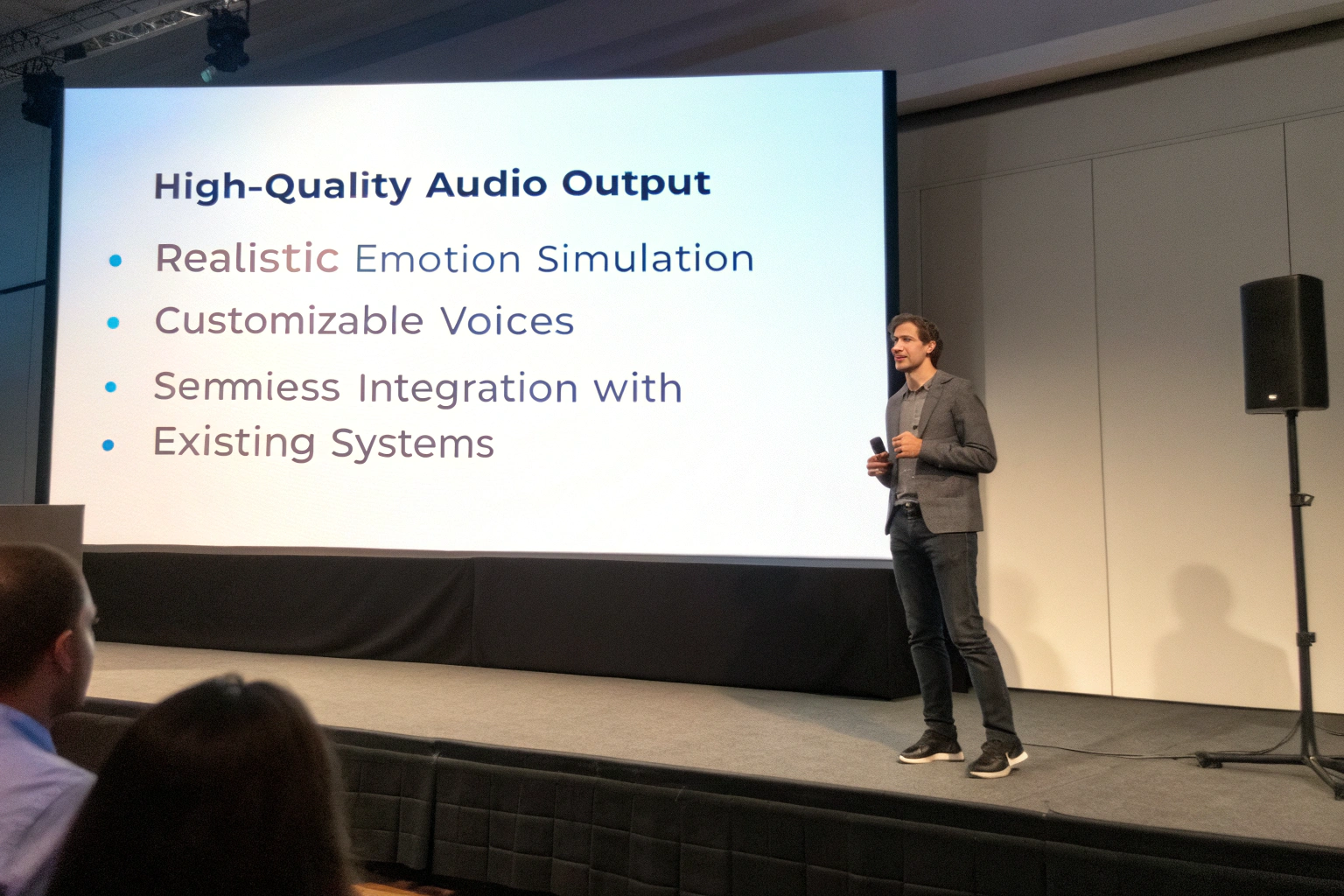

Comparison with Traditional TTS

When comparing Octave to traditional AI voice tools like ElevenLabs or PlayHT:

Where Octave Outperforms:

- Emotional delivery: According to benchmarks, Octave significantly outperforms traditional TTS in matching emotional descriptions.

- Audio quality: Testing shows superior sound clarity, particularly in emotional passages.

- Context understanding: The LLM actually comprehends what it's saying, leading to more natural emphasis and pacing.

- Pricing: Surprisingly competitive, with plans starting at $3/month—lower than many established TTS providers.

Current Limitations:

- Voice consistency: Traditional TTS maintains more consistent vocal characteristics throughout longer passages.

- Processing speed: LLM-based processing takes slightly longer than conventional TTS.

- Specialized use cases: For simple informational content where emotion isn't crucial, traditional TTS may still be more efficient.

Conclusion

Realistic, emotionally expressive voice synthesis represents the next frontier in digital content creation. Hume AI's Octave demonstrates that LLM-powered TTS is not just a novelty but a significant advancement that brings us closer to truly human-like synthetic voices.

While traditional TTS still maintains advantages in consistency for certain applications, the emotional intelligence and contextual understanding of LLM-based systems make them superior for storytelling, character voicing, and any content where emotional delivery matters.

As this technology continues to evolve—with Hume promising near-term improvements to address consistency issues—we're witnessing the dawn of a new era in voice synthesis. Whether you're creating audiobooks, marketing content, or game characters, AI-powered voice acting offers unprecedented creative possibilities without the cost and logistical challenges of traditional voice talent.

Have you experimented with LLM-based TTS systems? What uses do you envision for this technology? Share your experiences in the comments below!

FAQs

How does LLM-based TTS differ from traditional text-to-speech?

Traditional TTS converts text to speech without truly understanding context, while LLM-based systems like Octave comprehend the meaning behind words, allowing for emotionally appropriate delivery, mid-sentence tone shifts, and natural emphasis that mirrors human speech.

Is Hume's Octave suitable for commercial projects?

Yes, Hume AI offers commercial licensing options for all content created on their platform. The pricing is competitive with other TTS services, starting at just $3 per month for basic usage, with enterprise options available for larger projects.

Can Octave clone specific voices?

While Octave excels at creating voices based on detailed descriptions, it's not primarily designed for exact voice cloning. Instead, it creates unique voices based on your prompts. For legal and ethical reasons, avoid attempting to recreate the voices of celebrities or other specific individuals without permission.

How many different voices can I create with Octave?

There's no practical limit to the number of unique voices you can create with Octave. Each voice is generated based on your prompt description, allowing for virtually infinite variations by adjusting your descriptive parameters.

What's the maximum length of audio I can generate?

Octave works best with content broken into manageable segments using the project feature. While there's no hard limit on length, optimal results come from segments under 2-3 minutes, especially when maintaining consistent emotional delivery is important.
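
To keep segments under a target length before pasting them into a project, you can estimate duration from word count and split at sentence boundaries. The sketch below assumes an average speaking rate of 150 words per minute, which is a rough rule of thumb rather than a figure from Hume, and the helper name `split_script` is hypothetical.

```python
import re

WORDS_PER_MINUTE = 150  # assumed average speaking rate; adjust for your voice


def split_script(script: str, max_minutes: float = 2.0) -> list[str]:
    """Group whole sentences into segments whose estimated length stays under max_minutes.

    A single sentence longer than the limit is kept whole as its own segment.
    """
    if not script.strip():
        return []
    max_words = int(max_minutes * WORDS_PER_MINUTE)
    # Split after sentence-ending punctuation followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", script.strip())
    segments, current, count = [], [], 0
    for sentence in sentences:
        words = len(sentence.split())
        # Start a new segment when adding this sentence would exceed the limit.
        if current and count + words > max_words:
            segments.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += words
    if current:
        segments.append(" ".join(current))
    return segments


demo = "The storm rolled in at dusk. Nobody was ready for it. " * 40
for number, segment in enumerate(split_script(demo, max_minutes=1.0), start=1):
    print(number, len(segment.split()), "words")
```

Splitting on sentence boundaries avoids cutting a line mid-thought, which matters when each segment is delivered with its own emotional arc.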

Does Octave support languages other than English?

Currently, Octave's primary focus is on English-language content, though Hume AI has indicated plans to expand language support in future updates. For non-English content, traditional TTS systems may still offer better results for now.

How can I fix consistency issues in longer recordings?

For longer content, break your script into segments with similar emotional requirements using the project feature. You can also standardize voice characteristics in your prompt and consider basic audio post-processing to normalize pitch variations between segments.